Hi all

As many of you know, I am currently writing a book alongside some of the best of the community. Right now I am writing about developing functoids, and in doing so I have discovered that there are plenty of blog posts and helpful articles out there about developing functoids, but hardly any of them deal with developing cumulative functoids. So I thought the world might end soon if this wasn’t rectified.

Developing functoids really isn’t as hard as it might seem. As I have explained numerous times, I consider creating a good icon the most difficult part of it :-)

When developing custom functoids, you need to choose between developing a referenced functoid or an inline functoid. A referenced functoid is a normal .NET assembly that is GAC’ed and called from the map at runtime, which means it must be deployed on every BizTalk Server that runs a map using the functoid. An inline functoid, on the other hand, outputs a string containing the source code of a method; this method is put inside the XSLT and called from there.

There are ups and downs to both. My preference usually goes towards the referenced functoid… not because of the reasons mentioned on MSDN, but simply because I can’t be bothered with creating a method that outputs a string that is a method. It just looks ugly :)

So, in this blog post I will develop a custom cumulative functoid that generates a comma-delimited string from a recurring input node.

First, the functionality that is needed for both referenced and inline functoids:

All functoids must inherit from the BaseFunctoid class, which is in the Microsoft.BizTalk.BaseFunctoids namespace. The assembly containing it is usually found at <InstallationFolder>\Developer Tools\Microsoft.BizTalk.BaseFunctoids.dll.

Usually a custom functoid consists of:

- A constructor that does almost all the work of setting up the functoid

- The method to be called at runtime (for a referenced functoid), or a method that returns a string containing that method (for an inline functoid).

- Resources for name, tooltip, description and icon

A custom cumulative functoid consists of the same parts, but it also has a data structure to keep the aggregated values in, and it has two additional methods to specify. A cumulative functoid needs three methods instead of one because the first is called to initialize the data structure, the second is called once for every occurrence of the input node, and the third is called to retrieve the aggregated value.
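To make the three phases concrete outside BizTalk, here is a minimal sketch in plain C#. The interface `ICumulative`, the classes `CumulativeSum` and `CumulativeRunner`, and the choice of a sum as the aggregate are all hypothetical, invented only for illustration; in a real functoid the methods are wired up through `BaseFunctoid`. The runner just calls the methods in the order described above: initialize once, add once per occurrence, retrieve once.

```csharp
using System.Collections.Generic;
using System.Globalization;

// Hypothetical interface mirroring the three methods of a cumulative functoid.
// This is not part of the BizTalk API.
public interface ICumulative
{
    string Initialize(int index);                      // once per functoid instance
    string Add(int index, string value, string scope); // once per occurrence of the input node
    string Retrieve(int index);                        // once, to get the aggregated value
}

// Hypothetical aggregator: sums numeric inputs, one running total per index.
public class CumulativeSum : ICumulative
{
    private readonly Dictionary<int, decimal> totals = new Dictionary<int, decimal>();

    public string Initialize(int index)
    {
        totals[index] = 0m;
        return "";
    }

    public string Add(int index, string value, string scope)
    {
        // Non-numeric values are skipped; scope is unused in this sketch.
        if (decimal.TryParse(value, NumberStyles.Number, CultureInfo.InvariantCulture, out decimal d))
            totals[index] += d;
        return "";
    }

    public string Retrieve(int index) =>
        totals[index].ToString(CultureInfo.InvariantCulture);
}

public static class CumulativeRunner
{
    // Calls the methods in the described order:
    // Initialize once, Add for every occurrence, Retrieve once.
    public static string Run(ICumulative aggregator, int index, IEnumerable<string> occurrences)
    {
        aggregator.Initialize(index);
        foreach (string value in occurrences)
            aggregator.Add(index, value, "");
        return aggregator.Retrieve(index);
    }
}
```

For example, `CumulativeRunner.Run(new CumulativeSum(), 0, new[] { "1", "2.5", "abc", "3" })` returns `"6.5"`, since `"abc"` is skipped.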

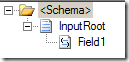

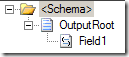

To exemplify, I have created two very simple schemas:

Source schema.

Source schema.

Destination schema.

Destination schema.

The field “Field1” in the source schema has maxOccurs = unbounded, and the field “Field1” in the destination schema has maxOccurs = 1.

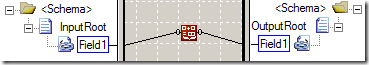

I have then created a simple map between them:

The map simply uses the built-in “Cumulative Concatenate” functoid to concatenate all occurrences of “Field1” in the source schema into one value, which is output to the “Field1” node in the destination schema.

The generated XSLT looks something like this:

```xml
<xsl:template match="/s0:InputRoot">
  <ns0:OutputRoot>
    <xsl:variable name="var:v1" select="ScriptNS0:InitCumulativeConcat(0)" />
    <xsl:for-each select="/s0:InputRoot/Field1">
      <xsl:variable name="var:v2" select="ScriptNS0:AddToCumulativeConcat(0,string(./text()),&quot;1000&quot;)" />
    </xsl:for-each>
    <xsl:variable name="var:v3" select="ScriptNS0:GetCumulativeConcat(0)" />
    <Field1>
      <xsl:value-of select="$var:v3" />
    </Field1>
  </ns0:OutputRoot>
</xsl:template>
```

As you can see, “InitCumulativeConcat” is called once, then “AddToCumulativeConcat” is called for each occurrence of “Field1”, and finally “GetCumulativeConcat” is called and its value is inserted into the “Field1” node of the output.
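The same sequence can be mimicked in plain C# with LINQ to XML, which may help when reasoning about what the template computes. This is a sketch under assumptions: namespaces are left out, and the three `*CumulativeConcat` methods below are hypothetical stand-ins that concatenate without a delimiter. They are not BizTalk's actual implementation of the built-in functoid.

```csharp
using System.Collections.Generic;
using System.Text;
using System.Xml.Linq;

// Hypothetical C# mirror of the generated XSLT template. Namespaces are
// omitted and the three methods below are stand-ins, not BizTalk code.
public static class TemplateSimulation
{
    private static readonly Dictionary<int, StringBuilder> buffers = new Dictionary<int, StringBuilder>();

    private static string InitCumulativeConcat(int index)
    {
        buffers[index] = new StringBuilder();
        return "";
    }

    private static string AddToCumulativeConcat(int index, string value, string scope)
    {
        buffers[index].Append(value); // scope ("1000" in the XSLT) is ignored here
        return "";
    }

    private static string GetCumulativeConcat(int index) => buffers[index].ToString();

    public static XElement Transform(XElement inputRoot)
    {
        InitCumulativeConcat(0);                                 // var:v1
        foreach (XElement field in inputRoot.Elements("Field1")) // xsl:for-each
            AddToCumulativeConcat(0, field.Value, "1000");       // var:v2
        string v3 = GetCumulativeConcat(0);                      // var:v3
        return new XElement("OutputRoot", new XElement("Field1", v3));
    }
}
```

Given `<InputRoot><Field1>a</Field1><Field1>b</Field1></InputRoot>`, `Transform` returns `<OutputRoot><Field1>ab</Field1></OutputRoot>`.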

So, back to the code needed for all functoids. It is basically the same as for normal functoids:

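```csharp
using System.Reflection;
using Microsoft.BizTalk.BaseFunctoids;

public class CummulativeComma : BaseFunctoid
{
    public CummulativeComma() : base()
    {
        // Unique functoid ID; values below 6000 are reserved for BizTalk's own functoids.
        this.ID = 7356;

        // NameOfResourceFile is assumed to be a string constant in this class
        // holding the name of the resource file.
        SetupResourceAssembly(GetType().Namespace + "." + NameOfResourceFile, Assembly.GetExecutingAssembly());

        // Resource keys for name, tooltip, description and icon.
        SetName("Str_CummulativeComma_Name");
        SetTooltip("Str_CummulativeComma_Tooltip");
        SetDescription("Str_CummulativeComma_Description");
        SetBitmap("Bmp_CummulativeComma_Icon");

        // One required input (the recurring node) plus an optional scoping input.
        this.SetMinParams(1);
        this.SetMaxParams(2);

        this.Category = FunctoidCategory.Cumulative;
        this.OutputConnectionType = ConnectionType.AllExceptRecord;

        // Allowed connection type for the input.
        AddInputConnectionType(ConnectionType.AllExceptRecord);
    }
}
```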

Basically, you need to:

- Set the ID of the functoid to a unique value that is greater than 6000. Values smaller than 6000 are reserved for BizTalk’s own functoids.

- Call SetupResourceAssembly to let the base class know what resource file to get resources from

- Call SetName, SetTooltip, SetDescription and SetBitmap to let the base class get the appropriate resources from the resource file. Remember to add the appropriate resources to the resource file.

- Call SetMinParams and SetMaxParams to determine how many parameters the functoid can have. They should be set to 1 and 2 respectively. The first parameter is for the recurring node and the second is a scoping input.

- Set the category of the functoid to “Cumulative”

- Determine both the type of nodes/functoids the functoid can get input from and what it can output to.

I won’t describe these any further right now. They are explained in more detail in the book :) And there are plenty of posts out there about these methods and properties.

Now for the functionality needed for a referenced functoid:

Besides what you have seen above, a referenced functoid needs the three methods to be written and referenced. This is done like this:

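```csharp
// Method called once to initialize the data structure, located in this functoid's own assembly and type.
SetExternalFunctionName(GetType().Assembly.FullName, GetType().FullName, "InitializeValue");
// Method called once for every occurrence of the input node.
SetExternalFunctionName2("AddValue");
// Method called to retrieve the aggregated value.
SetExternalFunctionName3("RetrieveFinalValue");
```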

The above code must be in the constructor along with the rest. Now, all that is left is to write the code for those three methods, which can look something like this:

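```csharp
// Requires "using System.Collections;" at the top of the file.
// Holds one aggregated string per functoid instance, keyed by index.
private Hashtable myCumulativeArray = new Hashtable();

// Called once per functoid instance: start with an empty aggregate.
public string InitializeValue(int index)
{
    myCumulativeArray[index] = "";
    return "";
}

// Called for every occurrence of the input node: append the value and a comma.
// The scope parameter is not used in this example.
public string AddValue(int index, string value, string scope)
{
    string str = myCumulativeArray[index].ToString();
    str += value + ",";
    myCumulativeArray[index] = str;
    return "";
}

// Called once at the end: return the aggregate without the trailing comma.
public string RetrieveFinalValue(int index)
{
    string str = myCumulativeArray[index].ToString();
    if (str.Length > 0)
        return str.Substring(0, str.Length - 1);
    else
        return "";
}
```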

So, as you can see, a data structure (in this case a Hashtable) is declared to store the aggregated results. All three methods have an index parameter that is used as the key into the data structure, in case the functoid is used multiple times in the same map. The mapper generates a unique index for each use.
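To see why the index matters, the three methods can be lifted out of the functoid into a plain class and called with two indexes whose calls interleave. `CommaAggregator` and `InterleavingDemo` are hypothetical names; the method bodies are the ones shown above, minus the `BaseFunctoid` plumbing. Whether the mapper actually interleaves calls for two functoid instances depends on the generated XSLT; the sketch only shows that the data structure keeps them apart if it does.

```csharp
using System.Collections;

// Stand-in for the referenced functoid's runtime methods (hypothetical class name).
public class CommaAggregator
{
    private Hashtable myCumulativeArray = new Hashtable();

    public string InitializeValue(int index)
    {
        myCumulativeArray[index] = "";
        return "";
    }

    public string AddValue(int index, string value, string scope)
    {
        myCumulativeArray[index] = myCumulativeArray[index].ToString() + value + ",";
        return "";
    }

    public string RetrieveFinalValue(int index)
    {
        string str = myCumulativeArray[index].ToString();
        return str.Length > 0 ? str.Substring(0, str.Length - 1) : "";
    }
}

public static class InterleavingDemo
{
    // Two uses of the functoid, indexes 0 and 1, with their calls interleaved.
    // Each index has its own Hashtable entry, so the values never mix.
    public static (string, string) Run()
    {
        var agg = new CommaAggregator();
        agg.InitializeValue(0);
        agg.InitializeValue(1);
        agg.AddValue(0, "a", "");
        agg.AddValue(1, "x", "");
        agg.AddValue(0, "b", "");
        agg.AddValue(1, "y", "");
        return (agg.RetrieveFinalValue(0), agg.RetrieveFinalValue(1));
    }
}
```

`InterleavingDemo.Run()` returns `("a,b", "x,y")`.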

Compile the project, copy the DLL to “<InstallationFolder>\Developer Tools\Mapper Extensions”, GAC the assembly, and you are good to go. Just reset the toolbox to load the functoid.
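Because the names passed to `SetExternalFunctionName`, `SetExternalFunctionName2` and `SetExternalFunctionName3` are plain strings, the C# compiler cannot tell you when a name does not match an actual method. A hypothetical unit-test helper using reflection can catch such a mismatch before deployment. `CumulativeFunctoidCheck` and `SampleCumulativeMethods` are invented for this sketch; in a real test you would pass `typeof(CummulativeComma)` instead of the stand-in type.

```csharp
using System;
using System.Reflection;

// Hypothetical test helper (not part of the BizTalk API): checks that the three
// method names exist as public methods with the signatures used in this post.
public static class CumulativeFunctoidCheck
{
    public static bool HasCumulativeMethods(Type functoidType, string init, string add, string retrieve)
    {
        MethodInfo i = functoidType.GetMethod(init, new[] { typeof(int) });
        MethodInfo a = functoidType.GetMethod(add, new[] { typeof(int), typeof(string), typeof(string) });
        MethodInfo r = functoidType.GetMethod(retrieve, new[] { typeof(int) });
        return i != null && a != null && r != null
            && i.ReturnType == typeof(string)
            && a.ReturnType == typeof(string)
            && r.ReturnType == typeof(string);
    }
}

// Stand-in with the same three signatures as the functoid above.
public class SampleCumulativeMethods
{
    public string InitializeValue(int index) => "";
    public string AddValue(int index, string value, string scope) => "";
    public string RetrieveFinalValue(int index) => "";
}
```

A misspelled name such as `"AddValues"` makes `HasCumulativeMethods` return `false`.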

Now for the functionality needed for an inline functoid:

The idea behind a cumulative inline functoid is the same as for a cumulative referenced functoid. You still need to specify three methods to use. For an inline functoid you need to generate the methods that will be included in the XSLT, though.

For the constructor, add the following lines of code:

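```csharp
// Global script: declares the data structure shared by the three methods.
SetScriptGlobalBuffer(ScriptType.CSharp, GetGlobalScript());
// Function 0: initialization; 1: aggregation; 2: retrieval of the final value.
SetScriptBuffer(ScriptType.CSharp, GetInitScript(), 0);
SetScriptBuffer(ScriptType.CSharp, GetAggScript(), 1);
SetScriptBuffer(ScriptType.CSharp, GetFinalValueScript(), 2);
```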

The first method call sets a script that will be global for the map. In this script you should initialize the needed data structure.

The second method call sets up the script that will initialize the data structure for a given instance of the functoid.

The third method call sets up the script that will add a value to the aggregated value in the data structure.

The fourth method call sets up the script that is used to retrieve the aggregated value.

As you can see, the second, third and fourth lines all call the same method, SetScriptBuffer. The last parameter tells the functoid whether the script being set up is the initialization, aggregation or retrieval method.

So, what is left is to implement these four methods. The code for this can look quite ugly, since you need to build a string and output it, but it goes something like this:

```csharp
// Requires "using System.Text;" for StringBuilder.
// Each method returns the C# source code of one script method as a string.

// Function 2: returns the aggregate without the trailing comma.
private string GetFinalValueScript()
{
    StringBuilder sb = new StringBuilder();
    sb.Append("\npublic string RetrieveFinalValue(int index)\n");
    sb.Append("{\n");
    sb.Append("\tstring str = myCumulativeArray[index].ToString();\n");
    sb.Append("\tif (str.Length > 0)\n");
    sb.Append("\t\treturn str.Substring(0, str.Length - 1);\n");
    sb.Append("\telse\n");
    sb.Append("\t\treturn \"\";\n");
    sb.Append("}\n");
    return sb.ToString();
}

// Function 1: appends a value and a comma to the aggregate.
private string GetAggScript()
{
    StringBuilder sb = new StringBuilder();
    sb.Append("\npublic string AddValue(int index, string value, string scope)\n");
    sb.Append("{\n");
    sb.Append("\tstring str = myCumulativeArray[index].ToString();\n");
    sb.Append("\tstr += value + \",\";\n");
    sb.Append("\tmyCumulativeArray[index] = str;\n");
    sb.Append("\treturn \"\";\n");
    sb.Append("}\n");
    return sb.ToString();
}

// Function 0: initializes the aggregate for this functoid instance.
private string GetInitScript()
{
    StringBuilder sb = new StringBuilder();
    sb.Append("\npublic string InitializeValue(int index)\n");
    sb.Append("{\n");
    sb.Append("\tmyCumulativeArray[index] = \"\";\n");
    sb.Append("\treturn \"\";\n");
    sb.Append("}\n");
    return sb.ToString();
}

// Global script: declares the Hashtable shared by the three script methods.
private string GetGlobalScript()
{
    return "private Hashtable myCumulativeArray = new Hashtable();";
}
```

By now, I suppose you can see why I prefer referenced functoids. You need to write the method anyway in order to check that it compiles, and wrapping it in methods that output it as strings is just plain ugly.
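If the string building is the main objection, one possible way to tidy it up, offered here as a suggestion rather than a documented BizTalk practice, is to write each script as a C# verbatim string literal, where a doubled `""` stands for one double-quote character. `InlineScripts` is a hypothetical name, and its methods are static only so the sketch runs on its own; the strings would still be handed to `SetScriptGlobalBuffer` and `SetScriptBuffer` as in the constructor. The script contents still get no compiler check, so the method text has to be verified separately.

```csharp
// Hypothetical alternative to the StringBuilder-based script methods.
// Inside a verbatim string (@"..."), "" produces a single " character,
// and line breaks are taken literally from the source file.
public static class InlineScripts
{
    public static string GetGlobalScript() =>
        "private Hashtable myCumulativeArray = new Hashtable();";

    public static string GetInitScript() => @"
public string InitializeValue(int index)
{
    myCumulativeArray[index] = """";
    return """";
}
";

    public static string GetAggScript() => @"
public string AddValue(int index, string value, string scope)
{
    string str = myCumulativeArray[index].ToString();
    str += value + "","";
    myCumulativeArray[index] = str;
    return """";
}
";

    public static string GetFinalValueScript() => @"
public string RetrieveFinalValue(int index)
{
    string str = myCumulativeArray[index].ToString();
    if (str.Length > 0)
        return str.Substring(0, str.Length - 1);
    else
        return """";
}
";
}
```

The emitted text reads exactly like the method it represents, which makes it easier to keep in sync with a compiled copy of the same method.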


Conclusion

As you can hopefully see, developing a cumulative functoid really isn’t much harder than developing a normal functoid; it just takes a couple more methods. I did mention that I usually prefer referenced functoids because of the ugliness of creating inline functoids. For cumulative functoids, however, you should NEVER use referenced functoids, but only inline functoids. The reason for this is quite good, actually, and you can read about it in my next blog post, which will come in a day or two.

Thanks

--

eliasen