Hi all

I just did a post on developing a custom cumulative functoid. You can find it here: http://blog.eliasen.dk/2010/01/13/DevelopingACustomCumulativeFunctoid.aspx

At the very end of the post I write that you should NEVER develop a custom referenced cumulative functoid, but should instead develop a custom inline cumulative functoid. Given the title of this blog post, you probably know why by now.

When I developed my first cumulative functoid, I developed a referenced functoid, since this is what I prefer. I tested it on an input and it worked fine. Then I deployed it and threw 1023 copies of the same message through BizTalk at the same time. My test solution had two very simple schemas:

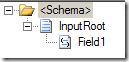

Source schema.


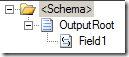

Destination schema.


The field “Field1” in the source schema has a maxOccurs = unbounded and the field “Field1” in the destination schema has maxOccurs = 1.

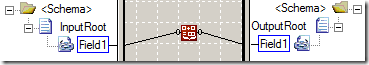

I then created a simple map between them:

The map merely uses my “Cumulative Comma” functoid (yes, I know the screenshot is of another functoid, sorry about that…) to concatenate all occurrences of “Field1” in the source schema into one comma-separated value, which is output to the “Field1” node in the destination.

My 1023 test instances all have 10 occurrences of “Field1” in the input, so every output XML should have these ten values as a comma-separated list in the “Field1” element of the destination schema.

Basically, what I found was that the outcome was quite unpredictable. Some of the output XML files had a completely empty “Field1” element. Others had perhaps 42 values in their comma-separated list. About 42% of the output files had the right number of values in the list, but I don’t really trust that they are the right values…

Anyway, I looked at my code, and looked again… couldn’t see anything wrong. So I thought I’d try with the cumulative functoids that ship with BizTalk. I replaced my functoid with the built-in “Cumulative Concatenate” functoid and did the same test. The output was just fine – nothing wrong. This baffled me a bit, but then I discovered that the cumulative functoids that ship with BizTalk are actually developed so they can be used as BOTH referenced functoids and inline functoids. Which one is used depends on the value of the “Script Type Precedence” property on the map. By default, inline C# has priority, so the built-in “Cumulative Concatenate” functoid wasn’t used as a referenced functoid as my own functoid was. I changed the property to have “External Assembly” as first priority and checked the generated XSLT to make sure that now it was using the functoid as a referenced functoid. It was. So I deployed and tested… and guess what?

I got the same totally unpredictable output as I did with my own functoid!

So the conclusion is simple: the cumulative functoids that ship with BizTalk are NOT thread safe when used as referenced functoids. As a matter of fact, I claim that it is impossible to write a thread safe referenced cumulative functoid, for reasons I will now explain.

When using a referenced cumulative functoid, the generated XSLT looks something like this:

    <xsl:template match="/s0:InputRoot">
      <ns0:OutputRoot>
        <xsl:variable name="var:v1" select="ScriptNS0:InitCumulativeConcat(0)" />
        <xsl:for-each select="/s0:InputRoot/Field1">
          <xsl:variable name="var:v2" select="ScriptNS0:AddToCumulativeConcat(0,string(./text()),&quot;1000&quot;)" />
        </xsl:for-each>
        <xsl:variable name="var:v3" select="ScriptNS0:GetCumulativeConcat(0)" />
        <Field1>
          <xsl:value-of select="$var:v3" />
        </Field1>
      </ns0:OutputRoot>
    </xsl:template>

As you can see, “InitCumulativeConcat” is called once, then “AddToCumulativeConcat” is called for each occurrence of “Field1”, and finally “GetCumulativeConcat” is called and its value is inserted into the “Field1” node of the output.

In order to make sure the functoid can distinguish between instances of the functoid, all three methods take an “index” parameter, which the documentation states is unique for that instance. The issue here is that this is only true for instances within the same map, not across all running instances of the map. As you can see in the XSLT, a value of “0” is used for the index parameter. If the functoid was used twice in the same map, a value of “1” would be hardcoded in the map for the second usage of the functoid, and so on.
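To make the role of the index parameter concrete, here is a minimal sketch in Python. It is not BizTalk code: the class `CumulativeConcat` and its `init`/`add`/`get` methods are hypothetical stand-ins for the three functoid methods, and keeping the state in a dictionary keyed by index is my assumption about how such a functoid might store its values. It shows a single map run that uses the functoid twice, which works as intended.

```python
class CumulativeConcat:
    """Hypothetical stand-in for a cumulative functoid's three methods."""

    def __init__(self):
        # Assumed storage: index -> values collected so far for that usage.
        self._parts = {}

    def init(self, index):
        # Reset the slot for this usage of the functoid.
        self._parts[index] = []

    def add(self, index, value):
        # Append one value to the slot for this usage.
        self._parts[index].append(value)

    def get(self, index):
        # Return everything collected in the slot, comma separated.
        return ",".join(self._parts[index])


functoid = CumulativeConcat()

# One map run using the functoid twice: the map hardcodes index 0 and 1.
functoid.init(0)
functoid.init(1)
for value in ["a", "b", "c"]:
    functoid.add(0, value)
for value in ["x", "y"]:
    functoid.add(1, value)

first = functoid.get(0)
second = functoid.get(1)
print(first)
print(second)
```

Within one map run the two usages never touch each other's slot, so the index does its job here.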

But if the map runs 1000 times simultaneously, every run will send a value of “0” to the functoid's methods. And since the functoid is not instantiated for each map, but rather the same object is used across all the maps, there will be a whole lot of method calls with the value “0” for the index parameter without the functoid having a clue as to which instance of the map is calling it, basically mixing everything up good.
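The mix-up can be reproduced without BizTalk. The sketch below is a simulation in Python under stated assumptions: `CumulativeConcat` is a hypothetical stand-in for a referenced functoid whose single object is shared by every map run, and instead of real threads it replays one hand-picked interleaving of two runs, so the result is deterministic. Real thread scheduling would produce other interleavings, but the effect is the same kind of corruption.

```python
class CumulativeConcat:
    """Hypothetical stand-in for a shared, referenced cumulative functoid."""

    def __init__(self):
        self._parts = {}  # assumed storage: index -> collected values

    def init(self, index):
        self._parts[index] = []

    def add(self, index, value):
        self._parts[index].append(value)

    def get(self, index):
        return ",".join(self._parts[index])


def simulate_interleaving():
    """Replay two map runs, A and B, that both use index 0 on one object."""
    shared = CumulativeConcat()

    shared.init(0)            # run A starts
    shared.add(0, "A1")
    shared.init(0)            # run B starts and wipes what A collected
    shared.add(0, "B1")
    shared.add(0, "A2")       # run A continues, now writing into B's list
    result_a = shared.get(0)  # run A finishes
    shared.add(0, "B2")
    result_b = shared.get(0)  # run B finishes
    return result_a, result_b


result_a, result_b = simulate_interleaving()
print("A expected A1,A2 but got:", result_a)
print("B expected B1,B2 but got:", result_b)
```

Run A loses a value and picks up one of B's, and run B ends up with too many values, which matches the kind of output I saw in the test.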

The reason it works for inline functoids is, of course, that there is no object to be shared across map instances – it’s all inline for each map… so here the index parameter is actually unique and things work.
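The inline case can be sketched the same way. Again this is Python, not BizTalk code, and `CumulativeConcat` and `run_map` are hypothetical names. The only assumption carried over from the text is that each map run gets its own private state, so here every run creates its own object. With that, 1023 runs on a thread pool all produce the right output even though every one of them uses index 0.

```python
from concurrent.futures import ThreadPoolExecutor


class CumulativeConcat:
    """Hypothetical stand-in for the state an inline functoid keeps."""

    def __init__(self):
        self._parts = {}  # assumed storage: index -> collected values

    def init(self, index):
        self._parts[index] = []

    def add(self, index, value):
        self._parts[index].append(value)

    def get(self, index):
        return ",".join(self._parts[index])


def run_map(values):
    """One map run with its own private functoid state, as in the inline case."""
    functoid = CumulativeConcat()  # not shared with any other run
    functoid.init(0)
    for value in values:
        functoid.add(0, value)
    return functoid.get(0)


# 1023 messages with 10 values each, mirroring the test described earlier.
inputs = [[f"m{n}v{i}" for i in range(10)] for n in range(1023)]

with ThreadPoolExecutor(max_workers=16) as pool:
    outputs = list(pool.map(run_map, inputs))

all_correct = all(out == ",".join(vals) for out, vals in zip(outputs, inputs))
print(all_correct)
```

Nothing is shared between runs, so there is nothing for concurrent runs to overwrite.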

And the reason I cannot find anyone on the internet who has described this before me (the issue must have been there since BizTalk 2004) is probably that maps by default use the inline implementation when one is present, so probably no one has ever changed that property while also using a cumulative functoid under high load.

What is really funny is that the only example of developing a custom cumulative functoid I have found online is at MSDN: http://msdn.microsoft.com/en-us/library/aa561338(BTS.10).aspx and the example is actually a custom referenced cumulative functoid… which doesn’t work, because it isn’t thread safe. Funny, eh?

So, to sum up:

Never ever develop a custom cumulative referenced functoid – use the inline versions instead. I will have to update the one at http://eebiztalkfunctoids.codeplex.com right away :)

Good night…

--

eliasen