Hi all

Today I ran into something I had never seen before while creating a BizTalk map.

The issue occurs because my customer had a schema that imports two other schemas, both of which have an element called “metadata” – but naturally the two schemas have different target namespaces.

The main schema imports both and has two records just below the root, each referencing one of the two metadata elements in the imported schemas.

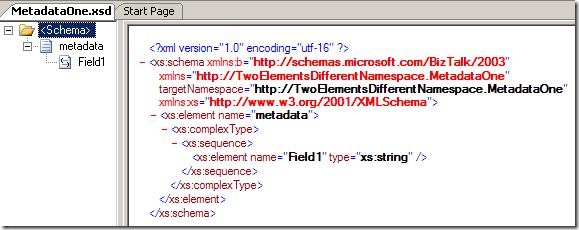

So the first schema could look like this:

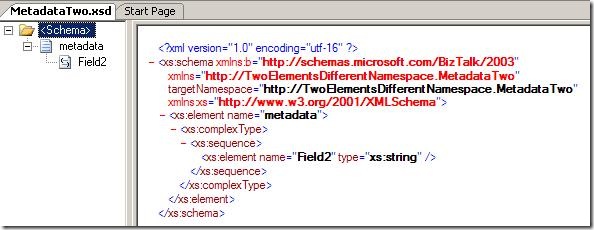

And the second schema could look like this:

So both have an element named “metadata” but one is in the namespace “http://TwoElementsDifferentNamespace.MetadataOne” and the other is in the namespace “http://TwoElementsDifferentNamespace.MetadataTwo”.

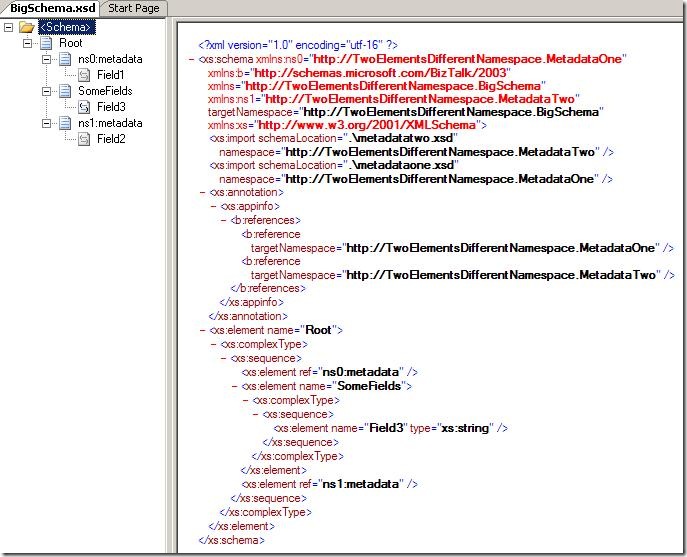

After that, I created the schema that imports both:

As you can see, it imports the first two schemas and has two elements that each reference one of the metadata elements from the first two schemas.

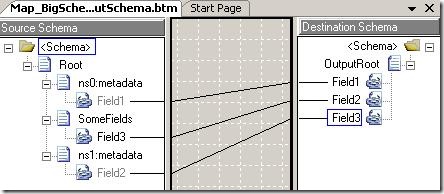

Also, I created an output schema that just has three elements, and then I created this map:

Pretty simple. Now, the issue comes when compiling, because I get this error:

Exception Caught: The map contains a reference to a schema node that is not valid. Perhaps the schema has changed. Try reloading the map in the BizTalk Mapper. The XSD XPath of the node is: /*[local-name()='<Schema>']/*[local-name()='Root']/*[local-name()='metadata']/*[local-name()='Field2']

So the first thing I tried was to reload the schema, but that didn’t help; it just broke one of my links.

In the end I found out that the issue is that the links are stored like this in the .BTM file:

<Link LinkID="1" LinkFrom="/*[local-name()='<Schema>']/*[local-name()='Root']/*[local-name()='metadata']/*[local-name()='Field1']" LinkTo="/*[local-name()='<Schema>']/*[local-name()='OutputRoot']/*[local-name()='Field1']" Label="" />

<Link LinkID="2" LinkFrom="/*[local-name()='<Schema>']/*[local-name()='Root']/*[local-name()='SomeFields']/*[local-name()='Field3']" LinkTo="/*[local-name()='<Schema>']/*[local-name()='OutputRoot']/*[local-name()='Field2']" Label="" />

<Link LinkID="3" LinkFrom="/*[local-name()='<Schema>']/*[local-name()='Root']/*[local-name()='metadata']/*[local-name()='Field2']" LinkTo="/*[local-name()='<Schema>']/*[local-name()='OutputRoot']/*[local-name()='Field3']" Label="" />

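So basically, the .BTM file saves links as XPath expressions WITHOUT the namespaces. Naturally, this goes wrong when there are two “metadata” elements on the same level in the schema: links 1 and 3 above both pass through a “metadata” step, and nothing in the path says which of the two is meant.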
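To see the ambiguity outside BizTalk, here is a minimal Python sketch using the standard library's `xml.etree.ElementTree`. It is not how the BizTalk Mapper resolves links internally; it only shows that a path built on local names matches both “metadata” elements, while a namespace-qualified lookup matches one. The instance document, including where `Field1`, `Field2` and `Field3` sit and which prefixes are used, is an assumption made for illustration, since the real schemas are only shown as images.

```python
import xml.etree.ElementTree as ET

NS_ONE = "http://TwoElementsDifferentNamespace.MetadataOne"
NS_TWO = "http://TwoElementsDifferentNamespace.MetadataTwo"

# Hypothetical instance document: the child layout and prefixes are
# assumptions for illustration, not taken from the actual schemas.
INSTANCE = f"""<Root xmlns:m1="{NS_ONE}" xmlns:m2="{NS_TWO}">
  <m1:metadata><Field1>a</Field1></m1:metadata>
  <m2:metadata><Field2>b</Field2></m2:metadata>
  <SomeFields><Field3>c</Field3></SomeFields>
</Root>"""


def local_name(tag: str) -> str:
    """Strip ElementTree's '{namespace}' prefix from a tag."""
    return tag.rsplit("}", 1)[-1]


root = ET.fromstring(INSTANCE)

# Rough equivalent of /*[local-name()='Root']/*[local-name()='metadata']:
# the namespace is ignored, so both elements match.
by_local_name = [child for child in root if local_name(child.tag) == "metadata"]

# Namespace-qualified lookup: only the element from the second schema matches.
by_qualified_name = root.findall(f"{{{NS_TWO}}}metadata")

print(len(by_local_name))      # 2
print(len(by_qualified_name))  # 1
```

With two candidates for the “metadata” step, a link that ends in `Field2` can end up being checked against the metadata element that only has `Field1`, which would be consistent with the “schema node that is not valid” error above.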

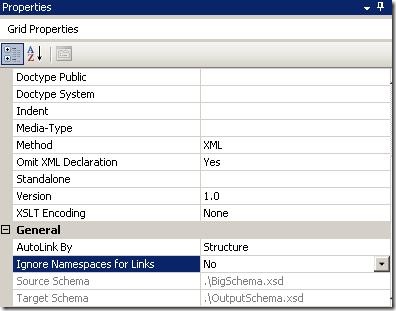

The way to solve this is to open the properties of the map and disable “Ignore Namespaces for Links”, like this:

After setting this property, the links are stored with namespaces inside the .BTM file, and everything is just fine.

One might wonder why the namespaces are not enabled by default, since they seem to make the solution more robust. Well, the reason is simple: if the namespaces are in all the links and you change, for instance, the namespace of the root node, then ALL links in the map get broken. So in the common case, not having the namespaces in the links actually makes the solution more robust.
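The trade-off can be sketched in a few lines of Python as well. Again, this is only an illustration with `xml.etree.ElementTree`, not BizTalk's own link resolution, and the two namespaces and the tiny document are hypothetical. A lookup that was recorded with the old namespace stops matching once the namespace changes, while a lookup on the local name keeps working.

```python
import xml.etree.ElementTree as ET

# Hypothetical namespaces, not taken from the post.
OLD_NS = "http://example.org/RootV1"
NEW_NS = "http://example.org/RootV2"


def make_doc(ns: str) -> ET.Element:
    """Build a tiny document whose elements all live in the given namespace."""
    return ET.fromstring(f'<r:Root xmlns:r="{ns}"><r:Field1>x</r:Field1></r:Root>')


def find_qualified(root: ET.Element, ns: str, name: str) -> list:
    """Find direct children by namespace AND local name."""
    return root.findall(f"{{{ns}}}{name}")


def find_by_local_name(root: ET.Element, name: str) -> list:
    """Find direct children by local name only, ignoring the namespace."""
    return [c for c in root if c.tag.rsplit("}", 1)[-1] == name]


before = make_doc(OLD_NS)
after = make_doc(NEW_NS)

# A "link" recorded with the old namespace works before the change...
print(len(find_qualified(before, OLD_NS, "Field1")))  # 1
# ...but matches nothing once the namespace has been changed.
print(len(find_qualified(after, OLD_NS, "Field1")))   # 0
# The namespace-free lookup survives the change.
print(len(find_by_local_name(after, "Field1")))       # 1
```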

So… I hope this can help someone.

You can download the solution here:

--

eliasen